SPELL: A Simple PE-Based Accelerator for Large Language Models

https://drive.google.com/file/d/1PB7ph6FT7vyKrkH3T91WcR5mgkj0zduP/view?usp=sharing

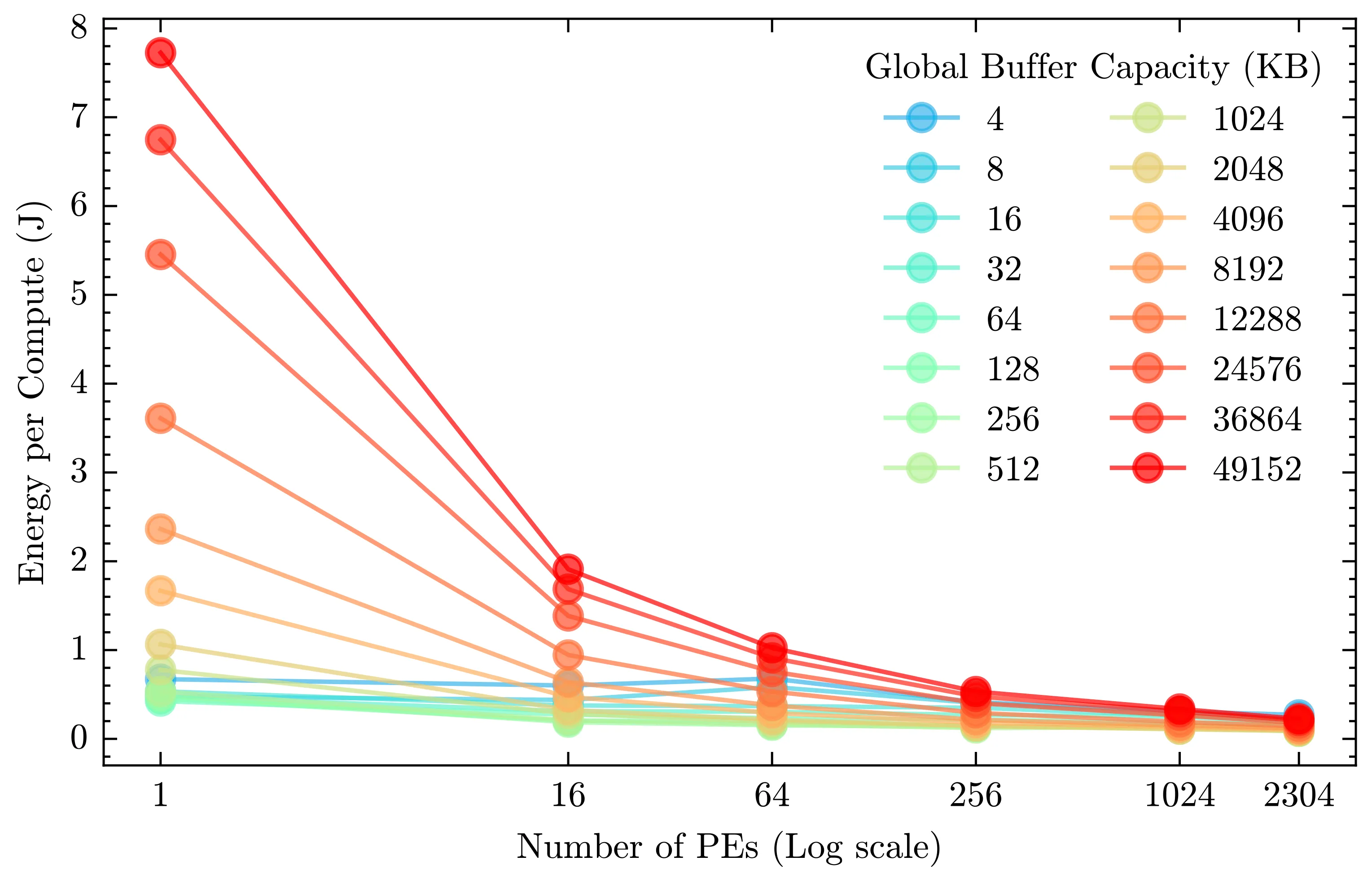

This was my final project for MIT’s Hardware Architectures for Deep Learning course, taught by Vivienne Sze and Joel Emer. We used Timeloop/Accelergy to model and optimize a digital DNN accelerator for LLM workloads. Because LLMs and MLPs are both dominated by dense matrix-matrix multiplications, we drew inspiration from Google’s TPUv1, which was designed to handle MLP workloads, which made up a large share (61%) of the neural network inference workload in Google’s datacenters at the time.
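To make the MLP/LLM connection concrete, here is a minimal sketch in plain Python showing that an MLP layer and an LLM query projection reduce to the same dense matrix-matrix multiply. The shapes, values, and names (`matmul`, `mlp_w`, `w_q`) are illustrative assumptions, not taken from the project or from Timeloop/Accelergy.

```python
def matmul(a, b):
    """Dense matrix-matrix multiply.

    Returns the product and the number of multiply-accumulates (MACs),
    which is the work a PE array would have to perform.
    """
    m, k, n = len(a), len(b), len(b[0])
    assert all(len(row) == k for row in a), "inner dimensions must match"
    out = [[0] * n for _ in range(m)]
    macs = 0
    for i in range(m):
        for j in range(n):
            for p in range(k):
                out[i][j] += a[i][p] * b[p][j]
                macs += 1
    return out, macs


# MLP layer (toy sizes): a batch of 2 inputs with 3 features, 2 output units.
mlp_in = [[1, 2, 3], [4, 5, 6]]
mlp_w = [[1, 0], [0, 1], [1, 1]]
mlp_out, mlp_macs = matmul(mlp_in, mlp_w)

# LLM query projection (toy sizes): 2 tokens, model width 3, head width 2.
tokens = [[1, 0, 2], [0, 1, 1]]
w_q = [[1, 2], [3, 4], [5, 6]]
q, q_macs = matmul(tokens, w_q)

print(mlp_out, mlp_macs)
print(q, q_macs)
```

Both calls run the same loop nest with the same M x K x N shape, so an accelerator built around dense matrix multiplies can serve either kind of layer.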